Internship by Esteban Mateos, in cooperation with Polytech Sorbonne, within Sorbonne University in Paris, France.

Abstract

This report presents the findings and outcomes of my internship, focusing on the feasibility of real-time change detection using a 3D reference model and drone-captured videos. The objective was to rapidly identify changes in the environment, such as fallen trees or suspicious objects.

During the familiarization phase, existing algorithms and feature detection and matching techniques were researched in depth. This involved studying various approaches that identify keypoints in images, match those keypoints between images, and use the matches to derive the differences between the images.

The internship involved working with drone-captured videos, a 3D model generated through photogrammetry, and telemetry data. The alignment of the 3D model and video frames enabled the creation of an image database for further analysis. Challenges, such as geographic offsets and manual corrections, were encountered during this process.

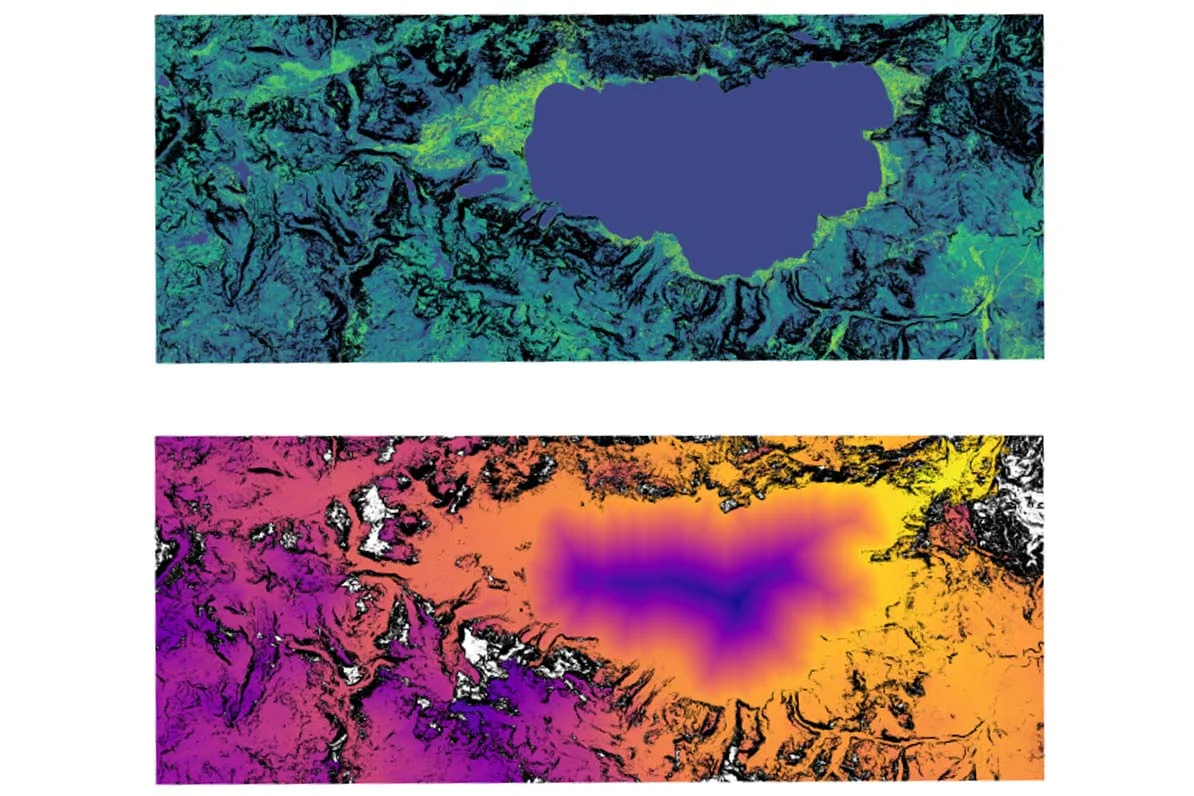

To address the limitations of existing algorithms, the Segment Anything Model (SAM) was explored. SAM is a promptable segmentation model that produces high-quality object masks and segments images flexibly according to the prompts it is given. It was used to identify the objects in the images so that they could be compared.

The comparison process involved generating masks, sorting them by area and color, and determining the differences between the reference image and the video frames. The results were presented visually, with the detected differences highlighted on an interactive 3D map.
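The comparison step can be illustrated with a minimal sketch. It assumes that each mask has already been reduced to its pixel area and mean color, and that two masks count as the same object when both values are close. The `Mask` structure, the function names, and the tolerance values are hypothetical choices made for illustration; they are not taken from the implementation used during the internship.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Mask:
    """Summary of one segmentation mask (hypothetical structure)."""
    area: int       # number of pixels covered by the mask
    color: tuple    # mean (R, G, B) color under the mask


def is_similar(a, b, area_tol=0.2, color_tol=30.0):
    """Return True if two masks have close areas and close mean colors.

    The tolerances are illustrative assumptions, not tuned values.
    """
    rel_area = abs(a.area - b.area) / max(a.area, b.area, 1)
    return rel_area <= area_tol and dist(a.color, b.color) <= color_tol


def find_differences(reference, frame, area_tol=0.2, color_tol=30.0):
    """Greedily pair frame masks with reference masks, largest first.

    Returns (appeared, disappeared): frame masks with no counterpart in
    the reference image, and reference masks with no counterpart in the frame.
    """
    unmatched_ref = sorted(reference, key=lambda m: m.area, reverse=True)
    appeared = []
    for mask in sorted(frame, key=lambda m: m.area, reverse=True):
        match = next(
            (r for r in unmatched_ref
             if is_similar(mask, r, area_tol, color_tol)),
            None,
        )
        if match is None:
            appeared.append(mask)        # object present only in the frame
        else:
            unmatched_ref.remove(match)  # each reference mask is used once
    return appeared, unmatched_ref


# Made-up example: the frame contains one extra small red object.
reference = [Mask(5000, (34, 120, 40)), Mask(1200, (120, 120, 120))]
frame = [
    Mask(5100, (36, 118, 42)),
    Mask(1150, (118, 121, 119)),
    Mask(800, (200, 30, 30)),
]
appeared, disappeared = find_differences(reference, frame)
print(appeared)
print(disappeared)
```

Sorting by area first means that large, stable objects are paired before small ones, which reduces the chance that a small new object is matched to an unrelated reference mask. A real pipeline would likely also use mask position, which this sketch leaves out.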

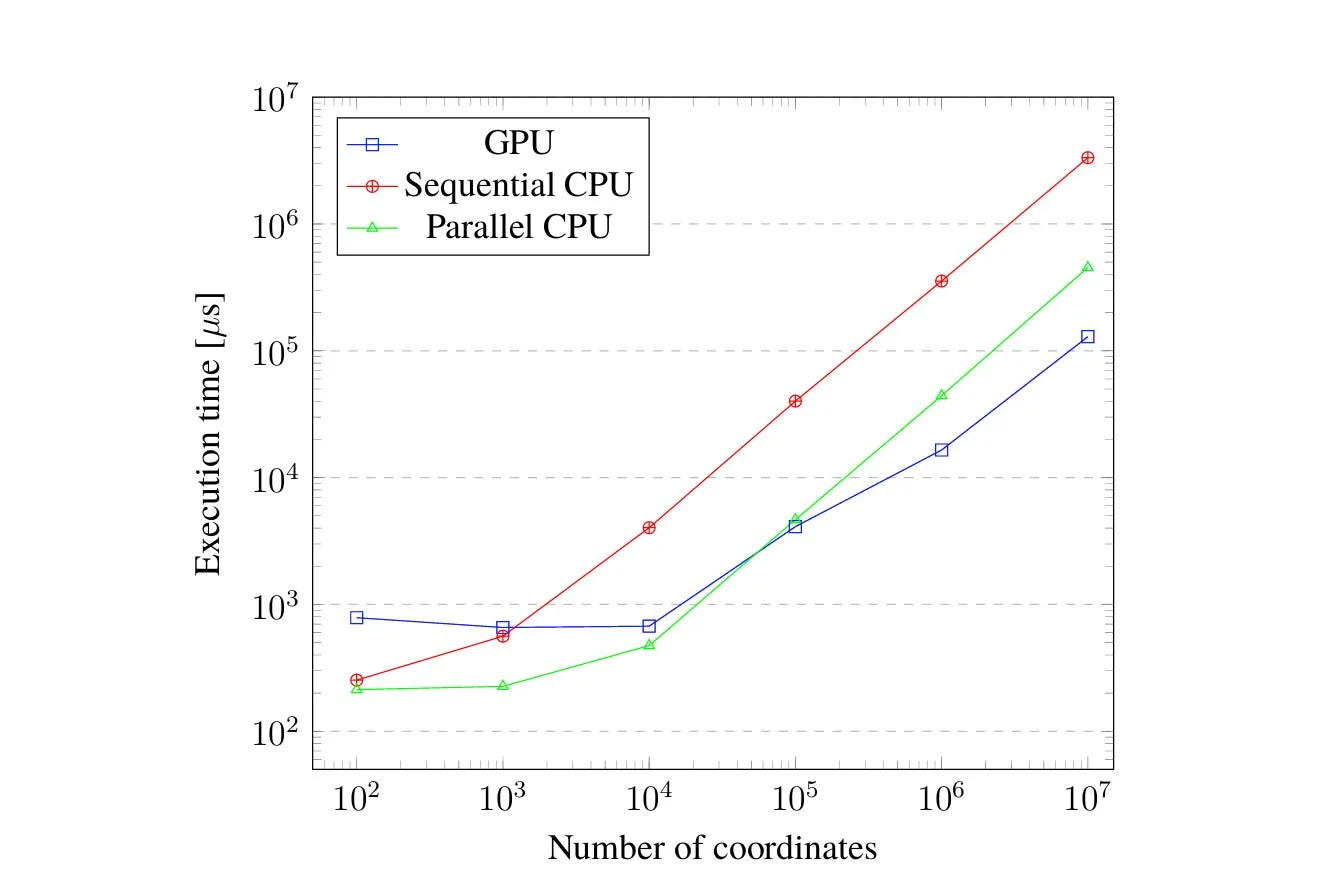

Throughout the internship, various challenges were encountered, including image differences, a unique perspective, limited datasets, and the use of unfamiliar software. Collaboration and knowledge-sharing within the company played a crucial role in overcoming these challenges. GPU computing posed a further challenge because SAM is resource-intensive: limited access to CUDA on the personal computer resulted in lost time during mask generation. The computational demands of SAM also made it impractical to embed the full solution in a drone, so the work had to be divided between real-time image generation on board and comparison on a more powerful system.

In conclusion, the internship provided valuable insights into real-time change detection using 3D models and drone-captured videos. The study highlighted the effectiveness of SAM for promptable segmentation. The findings contribute to understanding image comparison techniques and the challenges associated with using large machine learning models in resource-constrained environments.