Early detection of forest fires using deep learning

Master’s Thesis by Daniel Posch and Jesper Rask, in cooperation with Chalmers University of Technology in Gothenburg, Sweden.

Background

Wildfire risk is increasing in large parts of the world, driven mainly by warmer temperatures and drier conditions. The key to controlling a fire is to locate the affected area quickly, before the fire becomes uncontrollable.

As a consequence, researchers have shown increased interest in reliable early fire detection. Fire departments need an effective way to locate and map possible fires in an area.

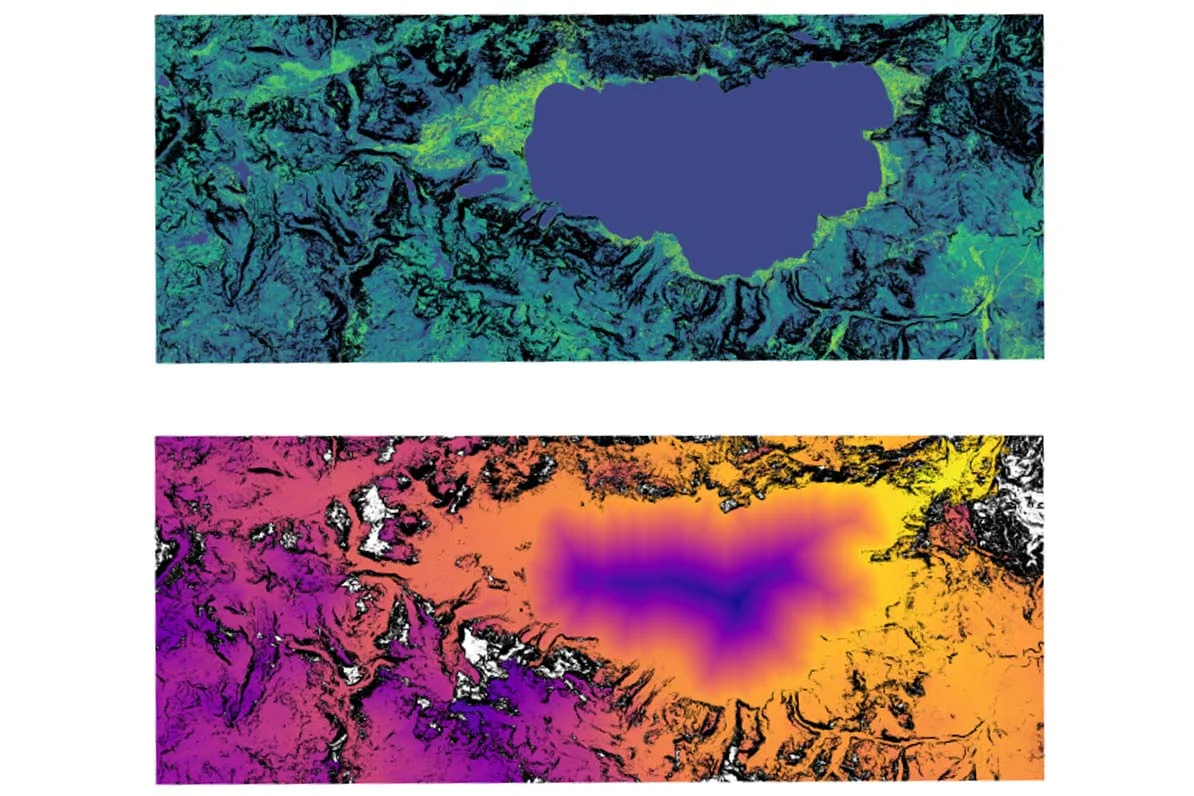

With aerial fire detection units, fires could be located and suppressed quickly.

Convolutional neural networks (CNNs) are the current state-of-the-art technique for image classification. This thesis explores the possibility of extracting fire features with trained models, with the aim of improving on the accuracy and detection speed of traditional approaches.

A fire detection system was created by evaluating known CNN architectures such as VGG16, FireNet, DenseNet and InceptionV3 using transfer learning. Models based on these architectures were trained on two modalities, thermal and RGB. A multimodal approach is proposed, in which the most accurate thermal model and the most accurate RGB model are combined by late fusion.
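To illustrate what late fusion means here, the sketch below combines the per-image fire probabilities of two independently trained models only after each has produced its own score. The fusion rule (a weighted average), the weight `w_rgb`, the decision threshold and the example scores are all assumptions made for illustration; the summary does not state which fusion rule the thesis uses.

```python
from typing import List, Sequence


def late_fusion(p_rgb: Sequence[float],
                p_thermal: Sequence[float],
                w_rgb: float = 0.5) -> List[float]:
    """Fuse per-image fire probabilities from two models.

    Assumption: a weighted average of the two scores. Other late-fusion
    rules (max, product, a small learned classifier) are equally possible.
    """
    if len(p_rgb) != len(p_thermal):
        raise ValueError("both models must score the same images")
    if not 0.0 <= w_rgb <= 1.0:
        raise ValueError("w_rgb must lie in [0, 1]")
    return [w_rgb * a + (1.0 - w_rgb) * b for a, b in zip(p_rgb, p_thermal)]


def classify(probs: Sequence[float], threshold: float = 0.5) -> List[bool]:
    """Label an image as 'fire' when its fused score reaches the threshold."""
    return [p >= threshold for p in probs]


# Hypothetical scores for three images (not taken from the thesis).
rgb_scores = [0.9, 0.4, 0.2]
thermal_scores = [0.8, 0.7, 0.1]

fused = late_fusion(rgb_scores, thermal_scores)
decisions = classify(fused)
print(decisions)
```

In this made-up example the second image is missed by the RGB model alone (0.4 is below the threshold) but is flagged once the thermal score is taken into account, which is the kind of benefit a multimodal approach is meant to provide.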

Implementing several architectures made it possible to compare single-stream networks with a multimodal architecture. The results indicate that a multimodal approach can improve model performance compared to traditional CNN approaches.

The multimodal architecture achieved a true positive rate (TPR) of 100% and a false positive rate (FPR) of 0%, while the best single-stream CNN reached a TPR of 97% and an FPR of 0.02%. The models were evaluated on a test set of 300 images taken from a surveying drone, independent of the training and validation sets.
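For reference, the two reported metrics can be computed from binary labels and predictions as in the minimal sketch below. It uses the standard definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN); the function name `tpr_fpr` and the example labels are hypothetical and are not taken from the thesis data.

```python
from typing import Sequence, Tuple


def tpr_fpr(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (TPR, FPR) for binary labels, where 1 = fire and 0 = no fire."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)

    # A rate is undefined (NaN) when its class has no samples.
    tpr = tp / (tp + fn) if (tp + fn) else float("nan")
    fpr = fp / (fp + tn) if (fp + tn) else float("nan")
    return tpr, fpr


# Hypothetical example: 3 fire images and 4 non-fire images.
y_true = [1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 0]

tpr, fpr = tpr_fpr(y_true, y_pred)
print(f"TPR = {tpr:.2%}, FPR = {fpr:.2%}")
```

A high TPR means few real fires are missed, while a low FPR means few false alarms; both matter for a detection system that triggers a response from a fire department.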